Dear friend of GLEN World,

As we head into the holiday season, we want to take a moment to reflect and share the progress that we’ve made this year toward GLEN World’s mission.

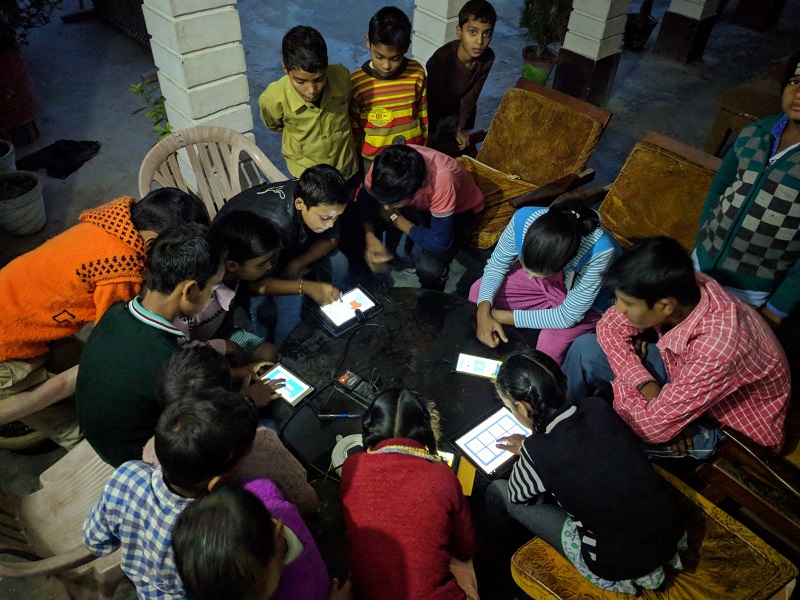

Our content continues to be discovered by learners around the globe thanks to our Google Ads grant. Our learner analytics indicate that around 98K learners have benefited from our content, having completed 777K learning activities and enjoyed 199K stories. With our YouTube channel, we’ve been able to reach additional learners. Most of our learners are from Asia and South America.

The mix of top cities our learners come from continues to vary; here are the top cities for January through November 2025.

Since last year, we have made a sustained push to use generative AI (genAI) for our mission of promoting early literacy, with our YouTube channel as the medium for dissemination. The idea is to use genAI to generate the text, audio, and images/videos for relatively short YouTube videos on material that we feel will stimulate curiosity and engagement in children. Our experiments started with AI Tails, a series on animals aimed at younger children. Popular videos in this series include Where Do Frogs Come From? and Big Elephants, Big Families.

This year we launched a new series called AI Explain, which we plan to use as an umbrella for high-quality nonfiction content across a range of areas spanning STEM, the humanities, and the social sciences, targeting various stages of early literacy. The initial focus of AI Explain is Inventions and Discoveries, starting with cornerstones of human development such as The Wheel and Language.

Our cautious approach to using AI was outlined in a November 2024 blog post announcing AI Tails, and the AI Explain initiative was announced in a September 2025 blog post. Our use of genAI has grown deeper as we gained experience with it and kept adapting to its ongoing advances. A couple of examples:

- When we started working on AI Tails, we were not convinced of the continuity and quality of AI-generated videos, so we chose to use still images instead. In our prompts for the text narrative, we sometimes had to ask the chatbot to adapt the narrative to work with still images. However, the quality of AI-generated videos has advanced significantly over the past year, so we decided to use short video clips for AI Explain, even though this requires more effort in terms of curation and iteration.

- We came up with many of the topics for the AI Tails series ourselves and supplemented them with suggestions from the chatbot. For the AI Explain series, we started one level higher, asking the chatbot to research effective methods for teaching English language skills to children through video. We then asked it to take this high-level research into account for each topic in the series when producing a script, accompanied by prompts for generating images and prompts for animating each image.

So AI now proposes the high-level approach, writes the text narrative, generates the audio narration, and generates and animates the images. But we humans bear the critical responsibility of enforcing quality and consistency, which is at times tedious. While we have worked out prompting and cross-checking mechanisms to avoid inaccuracies in the narrative, the text is sometimes too breathlessly enthusiastic or emotionally exaggerated and needs to be toned down. We also have to watch for occasional drops in quality, which can happen, for instance, when the chatbot provider updates its underlying AI models. Getting image and video generation to follow prompts consistently remains a significant challenge and requires a combination of editing and retries. Generating a satisfactory audio narration of the script is usually straightforward, although occasional artifacts require a few iterations.

Over months of experimenting with different tools and fine-tuning our content-generation workflows, we have reached a stage where creating the script, audio, video sync, and subtitles is not too painful. However, our decision to have a friendly robot narrator introduce each AI Explain video means that producing the lipsync and animation for these introductory segments takes significant trial and error. In summary, we continue learning and evolving in order to harness rapid advances in AI for our mission, while remaining vigilant against its shortcomings and glitches.

We are sharing our experience to give you a concrete sense of the effort involved, as well as the potentially significant payoff in terms of generating large and diverse content libraries for early literacy. Our intent is to continue this journey, adapting our approach as we learn more to best serve our young learners. What do you think? We’d love to hear your feedback and your ideas.

From all of us at GLEN World and the children we serve, THANK YOU to the bigger GLEN World family of donors, supporters, and partners. If you are not already part of our community, please join us!

As you plan your gifts for your loved ones and causes this holiday season, please consider making a gift to help us help every child get ready for school. Donate now.

Have a wonderful holiday season, and a great 2026!

The GLEN World Team

GLEN World is a 501(c)(3) nonprofit. If your employer has a donation matching program, that's a great way to double your gift's impact!